In Deepfakes and Cheap Fakes, Data & Society Affiliates Britt Paris and Joan Donovan trace decades of audiovisual (AV) manipulation to demonstrate how evolving technologies aid consolidations of power in society. Deepfakes, they find, are no new threat to democracy.

Deepfakes and Cheap Fakes

The Manipulation of Audio and Visual Evidence

Britt Paris, Joan Donovan

Report Summary

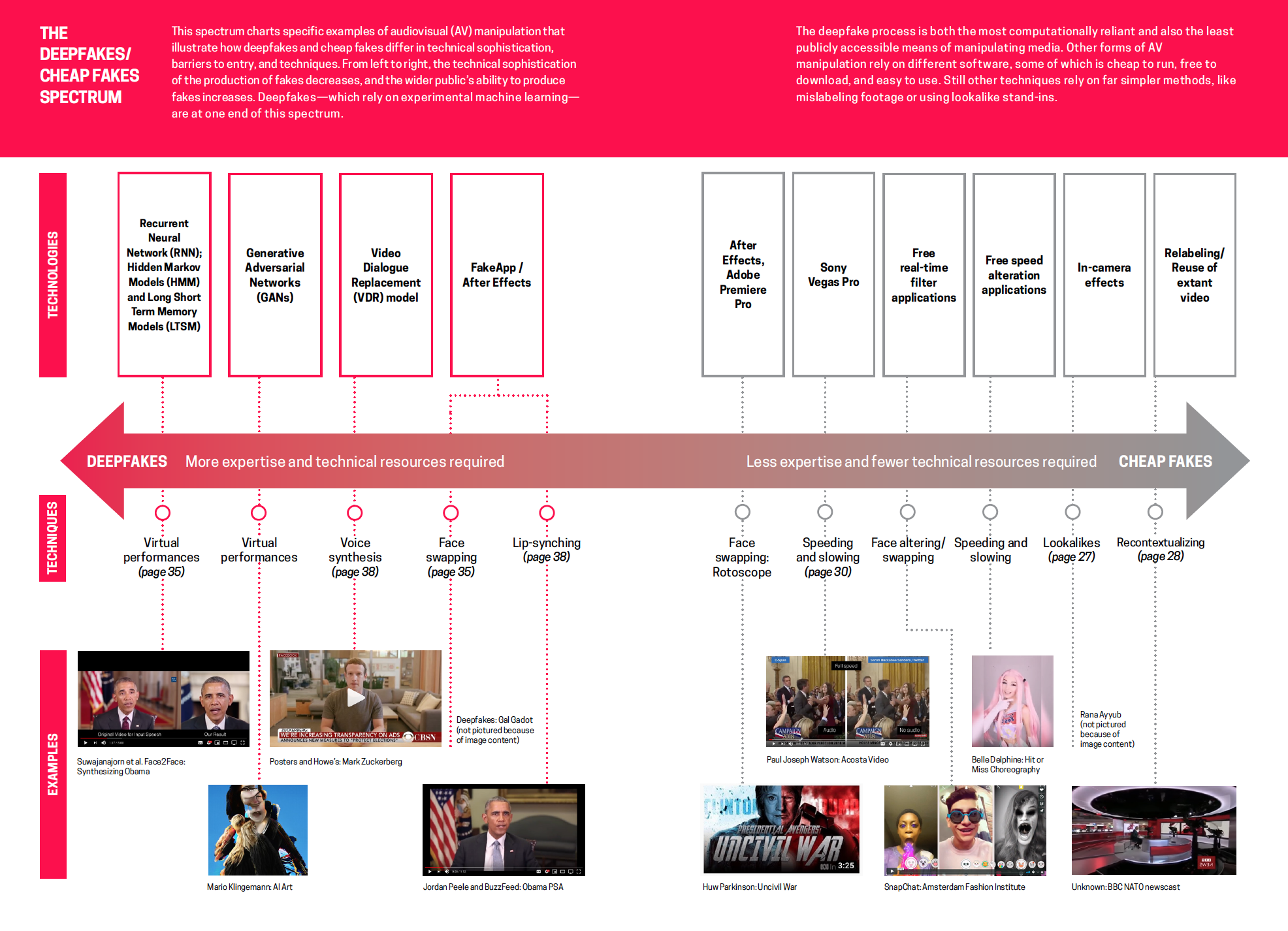

Coining the term “cheap fakes,” Paris and Donovan demonstrate that creating successfully deceptive media has never required advanced processing technologies such as today’s machine learning tools. A “deepfake” is a video that has been altered through some form of machine learning to “hybridize or generate human bodies and faces,” whereas a “cheap fake” is an AV manipulation created with cheaper, more accessible software (or none at all). Cheap fakes can be produced with Photoshop, lookalikes, recontextualized footage, or footage that has been sped up or slowed down.

Thanks to social media, both kinds of AV manipulation can now spread at unprecedented speeds. The report includes a spectrum diagram of these techniques.

Like many past media technologies, deepfakes and cheap fakes have jolted traditional rules around evidence and truth, and trusted institutions must step in to redefine those boundaries. This process, however, risks a select few experts gaining “juridical, economic, or discursive power,” thus further entrenching social, political, and cultural hierarchies. Those without the power to negotiate truth, including people of color, women, and the LGBTQA+ community, will be left vulnerable to increased harms, say the authors.

Paris and Donovan argue that we need more than an exclusively technological approach to address the threats of deep and cheap fakes. Any solution must take into account both the history of evidence and the “social processes that produce truth,” so that the power of expertise does not rest in the hands of a few and reinforce structural inequality, but is instead distributed among at-risk communities. “Media requires social work for it to be considered as evidence,” they write.

By using lookalike stand-ins, or relabeling footage of one event as another, media creators can easily manipulate an audience’s interpretations.